NO.216 Human-Computer-Interfaces x Neuroscience

June 23-26, 2025 (Check-in: June 22, 2025)

Organizers

- Pedro Lopes (University of Chicago, USA)

- Shunichi Kasahara (Sony Computer Science Laboratories, Japan)

- Takefumi Hiraki (Metaverse Lab / Cluster, Inc., Japan)

- Shuntaro Sasai (Araya Inc., Japan)

Overview

Meeting abstract

Computer science is brimming with innovation in how everyday users access computers. In only a few decades, computers went from highly specialized tools to everyday tools, an innovation driven by researchers and companies in Human-Computer Interaction (HCI). In fact, no other recent tool has experienced such widespread adoption: computers are used nearly anytime and anywhere, by nearly everyone. However, while computer scientists strive to create new interface paradigms that improve the way we communicate with computers (evolving from desktop to mobile and now to wearable devices), we are close to hitting a hard barrier for new computer interfaces: the complexity of the human body and its biology. The latest generations of computer interfaces sit ever closer to the human body (e.g., wearables, brain-computer interfaces, VR/AR), but designing them requires more than knowledge from computer science: it requires neuroscience. As such, in this Shonan Meeting, we propose to explore the intersection of Computer Science and Neuroscience, in what we call “HCI meets Neuroscience”; we denote “meets” as “x” to imply the multiplication that will happen once we combine our expertise to advance Computer Science.

Meeting description

Introduction. In only a few decades, computers went from highly specialized tools to everyday tools, an innovation driven by researchers and industry in Human-Computer Interaction (HCI). No other modern tool has experienced such widespread adoption: computers are used nearly anytime and anywhere, by nearly everyone. But this did not happen overnight. To achieve massive adoption, not only the hardware and software needed to improve, but especially the user interface.

Evolution of the interface. In the early days of computing, computers took up entire rooms and interacting with them was slow and sparse: users entered commands as text (first via punch cards, later via terminals) and received results after minutes or hours (as printed text). As a result, computers remained specialized tools that required tremendous expertise to operate. Entering an era of wider adoption required many advances (e.g., miniaturization of electronic components), most notably the invention of a new type of human-computer interface that allowed for more expressive interaction: the graphical user interface, many of whose elements (e.g., icons, folders, the desktop, and the mouse pointer) we still use today [1]. This revolution led to a proliferation of computers as office-support tools: because interactions were much faster and feedback was immediate, users now spent eight hours a day with a computer, rather than just a few minutes entering or reading text. Still, computers were stationary, and users never carried desktop computers around. More recently, not only did the steady miniaturization of components boost portability, but a new interface also revolutionized the way we interact: the touchscreen. By letting users touch a dynamic screen rather than press fixed keys, it enabled an extreme miniaturization of the interface (no physical keyboard needed), leading to the most widespread device in history: the smartphone. With smartphones, users no longer used computers only at their workplace; they carried them around during all their waking hours and used them anytime and anywhere.

What drives interface revolutions? We posit that three factors drive the evolution of interfaces: (1) hardware miniaturization, a corollary of Moore’s law; (2) maximizing the user interface, since almost every inch of a computer is now used for user I/O (e.g., nearly the entire form factor of a smartphone is touch-sensitive); and (3) bringing interfaces closer to the user’s body, as reflected in the fact that early users of mainframe computers interacted with them only for a brief period of the day, while modern users interact with computers for 10+ hours a day, across work and leisure.

Devices integrating with the user’s body. The interface revolution did not end there. Recently, these three trends have continued as a new interface paradigm reached the mainstream: wearable devices (e.g., smartwatches and VR/AR headsets). To arrive at this type of interactive device, (1) the hardware was miniaturized (especially via MEMS); (2) the interface was maximized, notably through motion sensors, 3D cameras, and haptic actuators, which unlocked new interaction modalities such as gestures and rich haptic feedback (e.g., vibration); and (3) the interface moved even closer to users, physically touching their skin and allowing them to wear a computer 24 hours a day. In fact, wearables excel at tasks that smartphones cannot handle, such as determining a user’s physiological state (e.g., heart rate or blood oxygen levels) or enabling extremely fast interactions (e.g., sending a message to a loved one while jogging).

Extrapolating this evolution as a starting point for our meeting. We can summarize this evolution of computers in diagrammatic form, as depicted in the figure below (taken from Mueller et al. [2]), and ask ourselves a pressing question for the field of computing: what shape will the next type of interface take? If we extrapolate our three arguments (i.e., smaller hardware, maximized I/O, and closer proximity to the user’s body), we can see on the horizon that the computer’s interface will integrate directly with the user’s biological body.

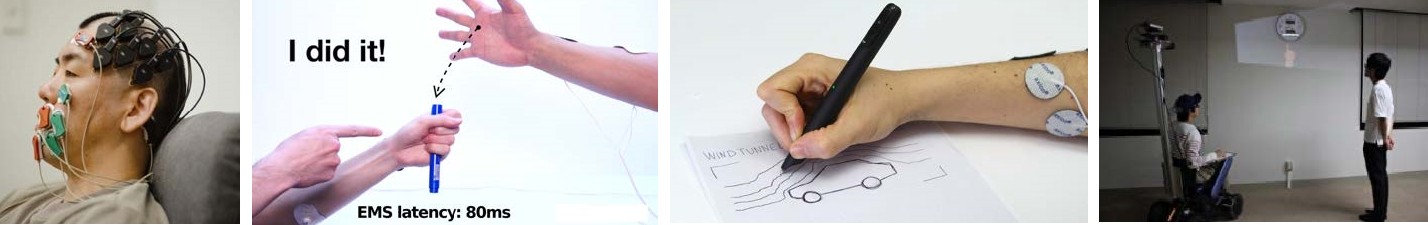

In the figure below, we illustrate this idea with four recent interfaces, all developed by members of the organizing team. Their unifying trend is that each interface uses part of the user’s body to accomplish its function. From left to right: (1) an EMG-and-EEG interface by Sasai [3], capable of reading brain and facial signals; (2) an interface that uses electrical muscle stimulation to accelerate the user’s reaction time [4, 5] (by Kasahara and Lopes); (3) an interface that alters the way a user draws on paper by controlling the user’s arm with electrical muscle stimulation [6] (by Lopes); and (4) an interface that lets a wheelchair user control it, along with additional cursors, using their muscles [7] (by Hiraki).

Why this meeting? While the previous interface revolutions (desktop, mobile, wearable) could be driven by computer engineers and human-computer interaction researchers, this next revolution requires new expertise: neuroscience. As we close in on a hard barrier, the complexity of the human body and its biology, we need to turn to our colleagues in Neuroscience to form a deeper, shared understanding of how to design these neural interfaces (e.g., those that interface with brain signals, muscle signals, pain signals, etc.). As such, in this Shonan Meeting, we propose to explore the intersection of Computer Science and Neuroscience, in what we call “HCI meets Neuroscience”; we denote “meets” as “x” to imply the multiplication that will happen once we combine our expertise to advance Computer Science.

Participants’ background. We believe that this meeting is a step toward a science of human-computer integration, in which Neuroscience will accelerate new computer interfaces and HCI will accelerate new neuroscience findings through new hardware and interfaces. We plan our meeting to plant the seed for a symbiotic relationship between HCI and Neuroscience. As such, we invite participants from diverse cultural backgrounds, most of whom also work in a distinct research field, so that together they complement the discussions by offering perspectives from both CS and Neuroscience.

References

[1] Umer Farooq and Jonathan Grudin. 2016. Human-computer integration. interactions 23, 6 (November-December 2016), 26–32. https://doi.org/10.1145/3001896

[2] Florian Floyd Mueller, Pedro Lopes, et al. 2020. Next Steps for Human-Computer Integration. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI '20). Association for Computing Machinery, New York, NY, USA, 1–15. https://doi.org/10.1145/3313831.3376242

[3] S. Sasai, F. Homae, H. Watanabe, A. T. Sasaki, H. C. Tanabe, N. Sadato, and G. Taga. 2014. Frequency-specific network topologies in the resting human brain. Frontiers in Human Neuroscience 8, 1022.

[4] Daisuke Tajima, Jun Nishida, Pedro Lopes, and Shunichi Kasahara. 2022. Whose Touch is This?: Understanding the Agency Trade-Off Between User-Driven Touch vs. Computer-Driven Touch. ACM Trans. Comput.-Hum. Interact. 29, 3, Article 24 (June 2022), 27 pages. https://doi.org/10.1145/3489608

[5] Shunichi Kasahara, Jun Nishida, and Pedro Lopes. 2019. Preemptive Action: Accelerating Human Reaction using Electrical Muscle Stimulation Without Compromising Agency. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI '19). Association for Computing Machinery, New York, NY, USA, Paper 643, 1–15. https://doi.org/10.1145/3290605.3300873

[6] Pedro Lopes, Doga Yüksel, François Guimbretière, and Patrick Baudisch. 2016. Muscle-plotter: An Interactive System based on Electrical Muscle Stimulation that Produces Spatial Output. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology (UIST '16). Association for Computing Machinery, New York, NY, USA, 207–217. https://doi.org/10.1145/2984511.2984530

[7] Kohei Morita, Takefumi Hiraki, Haruka Matsukura, Daisuke Iwai, and Kosuke Sato. 2021. Head orientation control of projection area for projected virtual hand interface on wheelchair. SICE Journal of Control, Measurement, and System Integration 14, 1 (2021), 223–232. https://doi.org/10.1080/18824889.2021.1964785

Topics

Human-computer interfaces: especially wearable and body-integrated interfaces, including electrical muscle stimulation, functional muscle stimulation, brain sensing, muscle sensing, movement sensing, and the translation of these interfaces to the public.

Computing platforms & implementation for neuro-interfaces: software and hardware implementations & algorithms for neuro data processing and real-time feedback.

Input-output bandwidth: including the understanding of typing speed, speech production speed, thought speed, movement/reaction-time speed, and so forth.

Brain-computer interfaces: including EEG, fMRI, MEG, fNIRS, mobile-EEG technologies and their translation into industrial projects and/or computing interfaces.

Muscle-computer interfaces: including surface-based EMG, wearable tomography technologies and their translation into industrial projects and/or computing interfaces.

Sense of agency when using computer interfaces: including the study of sense of agency and how consciousness is altered during extended use of computer interfaces.

Theories of neuroscience applicable to the design of Computer Interfaces: including predictive coding (applicable to I/O interfaces), sense of agency (applicable to wearable interfaces), body ownership & body schema (applicable to VR/AR interfaces), visual illusions (applicable to VR/AR interfaces), and their translation from Neuroscience to HCI and vice-versa.