NO.187 Theoretical Foundations of Nonvolatile Memory

July 1 - 4, 2024 (Check-in: June 30, 2024)

Organizers

- Martin Farach-Colton, New York University, USA

- Sam H. Noh, Virginia Tech, USA

- Gala Yadgar, Technion, Israel

Overview

Description of the Meeting

In the days of hard disks, a single I/O took several milliseconds, whereas a single CPU instruction took only about one nanosecond. Furthermore, effectively utilizing disk bandwidth required performing large I/Os, whereas RAM could be accessed at byte granularity.

Because RAM and disks were so different in their performance characteristics, research in algorithms and data structures bifurcated into the RAM and Disk-Access Machine (DAM) models. In the RAM model, the goal is to minimize the number of instructions executed. In the DAM model, all data is stored on disk and must be brought into RAM when needed. The goal in the DAM model is to minimize the number of I/Os performed.
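The difference between the two cost measures can be sketched in a few lines. The following is a minimal illustration, not a definition taken from this proposal: it assumes the usual DAM convention that data moves between disk and RAM in blocks of a fixed number of items, and the function names and the block size of 1024 items are hypothetical.

```python
def ram_cost_scan(n_items: int) -> int:
    """RAM model: cost is the number of items touched (one unit each)."""
    return n_items


def dam_cost_scan(n_items: int, block_items: int) -> int:
    """DAM model: cost is the number of block transfers for a sequential scan.

    Uses integer ceiling division so a partially filled last block still
    counts as one I/O.
    """
    return -(-n_items // block_items)


def dam_cost_random_reads(n_lookups: int) -> int:
    """DAM model, worst case: every lookup lands in a block not yet in RAM."""
    return n_lookups


if __name__ == "__main__":
    n = 1_000_000
    block = 1024  # hypothetical block size, in items
    print("RAM-model scan cost:   ", ram_cost_scan(n))
    print("DAM-model scan cost:   ", dam_cost_scan(n, block))
    print("DAM-model random reads:", dam_cost_random_reads(n))
```

Under these assumptions a sequential scan is roughly a thousand times cheaper in I/Os than the same number of random reads, which is why DAM-model algorithms are designed around block-sized accesses.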

In the stark world of RAM and hard disks, these models were good at capturing the most important performance features of algorithms and data structures. However, we are currently in the middle of multiple upheavals in storage hardware.

On the storage side, devices are getting faster, they now have internal parallelism, and they are becoming more diverse. For example, a cutting-edge NVMe drive may have a latency of only 10 µs, which is only about 100 times slower than RAM. Furthermore, NVMe storage bandwidth is now approaching 10 GB/s on very recent devices, which is approximately 100 times faster than a typical hard drive.
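The ratios quoted above can be checked with a few lines of arithmetic. The sketch below assumes a RAM access latency of about 100 ns and a hard-drive bandwidth of about 100 MB/s. Neither figure is stated in the text, but both are what the "100 times" comparisons imply. The 5 ms hard-disk latency is likewise an assumed stand-in for "several milliseconds".

```python
# Latencies in nanoseconds, bandwidths in MB/s (integers avoid float rounding).
NVME_LATENCY_NS = 10_000       # 10 microseconds, from the text
RAM_LATENCY_NS = 100           # assumption: roughly 100 ns per RAM access
HDD_LATENCY_NS = 5_000_000     # assumption: "several milliseconds" taken as 5 ms

NVME_BANDWIDTH_MBPS = 10_000   # about 10 GB/s, from the text
HDD_BANDWIDTH_MBPS = 100       # assumption: roughly 100 MB/s for a hard drive

nvme_vs_ram_latency = NVME_LATENCY_NS // RAM_LATENCY_NS
hdd_vs_nvme_latency = HDD_LATENCY_NS // NVME_LATENCY_NS
nvme_vs_hdd_bandwidth = NVME_BANDWIDTH_MBPS // HDD_BANDWIDTH_MBPS

if __name__ == "__main__":
    print(f"NVMe latency is {nvme_vs_ram_latency}x RAM latency")
    print(f"HDD latency is {hdd_vs_nvme_latency}x NVMe latency")
    print(f"NVMe bandwidth is {nvme_vs_hdd_bandwidth}x HDD bandwidth")
```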

Furthermore, locality is being replaced by parallelism. On a hard drive, the way to get good performance is locality, i.e. performing large I/Os. On solid state storage devices, the way to get good performance is parallelism, i.e. to present many I/Os to the device at the same time. On some devices, locality offers essentially no additional performance benefit, i.e. a highly concurrent random I/O workload will be just as fast as a workload of large, sequential I/Os.
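One way to see why parallelism matters is a back-of-the-envelope throughput model based on Little's law: the number of I/Os completed per second is roughly the number of I/Os in flight divided by the latency of each one, up to whatever the device can sustain. The sketch below is only an illustration under simplifying assumptions: latency is treated as independent of queue depth, and the device limit of 1,000,000 IOPS is a hypothetical figure, not a measurement.

```python
def estimated_iops(queue_depth: int, latency_us: int, device_max_iops: int) -> int:
    """Estimate I/Os per second for a given number of I/Os kept in flight.

    Little's law gives throughput = concurrency / latency; the result is
    capped at the device's maximum rate (a hypothetical parameter here).
    """
    uncapped = queue_depth * 1_000_000 // latency_us
    return min(uncapped, device_max_iops)


if __name__ == "__main__":
    latency_us = 10              # 10 microsecond device latency
    device_max = 1_000_000       # hypothetical device limit
    for depth in (1, 2, 4, 8, 16, 32):
        print(f"queue depth {depth:2d}: "
              f"{estimated_iops(depth, latency_us, device_max):>9,d} IOPS")
```

In this model a single outstanding I/O leaves the device at a tenth of its assumed limit, while ten or more concurrent I/Os saturate it, regardless of whether the requests are sequential or random.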

The big picture is that storage hardware is getting faster, more complex, more concurrent, and more diverse. Furthermore, the gap between each level of the memory hierarchy is narrowing. These small but non-trivial performance gaps put both the RAM and DAM models on shaky ground.

These are not just theoretical problems. For example, most (if not all) high-performance key-value stores are CPU bound on NVMe devices, even when they have numerous computing cores at their disposal. We recently built a new key-value store specifically designed to fully utilize the performance of high-end NVMe storage. We had to revisit every level of the software stack, from caches and locks all the way up to our sorting algorithms, in order to drive the device at its full hardware potential.

Objectives

We propose to bring together members of the architecture, operating systems and algorithms communities. Our workshop will follow a standard format of talks and open problem sessions, but with some changes. We will encourage attendees to prepare talks suitable for a general CS audience. We will also use “Say What?” sessions, a technique we’ve used in theory/systems workshops in the past. During a previous workshop, any time a presenter used a term that some members of the audience didn’t understand, the audience members were encouraged to say “Say What?” Then the term was added to the “Say What?” Board. We then had sessions in which systems researchers would explain the systems terms from the “Say What?” Board to the theoreticians, and vice versa.

In addition, some people stood out at previous theory/systems workshops as bridges between communities and as communication facilitators. Although we will seek to mix up the attendance to this workshop, we will strive to include these facilitators.

Our main goal is to catalyze the formation of cross-disciplinary groups who can both create the new models needed for understanding modern hardware and use those models for many concrete systems-level advances.

The storage system world is in a ferment as new hardware becomes available. Now is the time to establish deep partnerships across disciplines in computer science to solve some of the most pressing big data infrastructure problems.

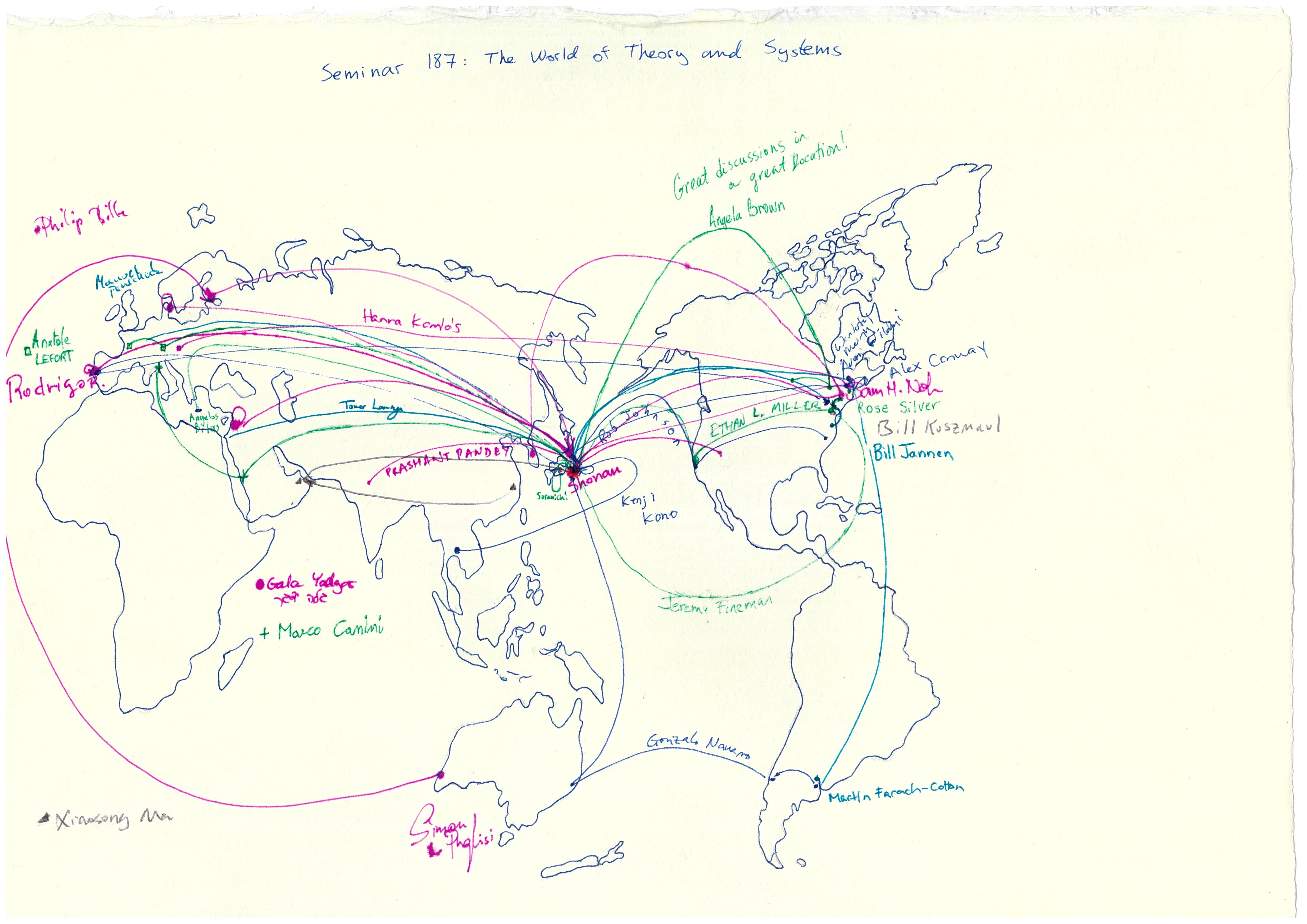

Message Board